The Too Good To Be True Filter

This blog post was originally published on the Datacratic blog, which is now unavailable.

At Datacratic, one of the products we offer our customers is our real-time bidding (RTB) optimisation, which can plug directly into any RTBKit installation. We're always working to improve our optimisation capabilities so that clients can identify valuable impressions for their advertisers. Every bid request is priced independently, and real-time feedback is given to the machine learning models, so they adjust immediately to changing conditions and learn about data they were not exposed to during their initial training. This blog post covers a strange click pattern we started noticing while exploring optimised campaign data, and a simple method we use to protect our clients from it.

Optimizing a cost-per-click campaign

Assume we are running a campaign optimized to lower the cost-per-click (CPC). The details of how we optimise such a campaign are beyond the scope of this post, but in a nutshell, we train a classifier that tries to separate bid requests based on the likelihood that they will generate a click, assuming we win the auction and show an impression. Our models are naturally multivariate, meaning they learn from many contextual features present in the bid request, as well as any third-party data that is available.

One simple and highly informative feature used by the model, the feature this post is about, is the site the impression would be shown on. From a modeling perspective, this roughly translates to asking if the target audience for your campaign visits that site. For example, for an airline campaign, there are surely more potentially interested visitors on a website about travel deals than on an unrelated site like the website of a small guitar store. This can be captured in many ways, one of which is by empirically looking at the ratio of clicks that were generated over the number of impressions purchased on the site. This ratio can be used by the model to estimate the probability that showing an impression on a particular site will result in a click.
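As a minimal sketch of this empirical ratio, assuming an impression log reduced to hypothetical `(site, clicked)` pairs (the post does not describe the actual log format), the per-site CTR is simply clicks divided by impressions. The site names are made up for illustration.

```python
from collections import defaultdict


def empirical_ctr_by_site(events):
    """Compute clicks / impressions for each site.

    `events` is an iterable of hypothetical (site, clicked) pairs, one per
    purchased impression; `clicked` is True if that impression got a click.
    """
    impressions = defaultdict(int)
    clicks = defaultdict(int)
    for site, clicked in events:
        impressions[site] += 1
        if clicked:
            clicks[site] += 1
    return {site: clicks[site] / impressions[site] for site in impressions}


# Made-up log: one click in 100 impressions on a travel site, none on a guitar store.
events_log = (
    [("travel-deals.example", True)]
    + [("travel-deals.example", False)] * 99
    + [("guitar-store.example", False)] * 100
)
ctr_by_site = empirical_ctr_by_site(events_log)
print(ctr_by_site)
```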

Assume the model buys a couple hundred impressions on a site, say AllAboutClickFarms.com, and gets an unusually high number of clicks. It will soon conclude that the probability of getting a click on that site is extremely high. As you may recall from how we calculate our bid price, our model bids higher as the probability of a click increases. Because of these higher bids, the model will win more and more auctions for impressions on that site, which will probably generate lots of clicks and in turn increase our confidence that AllAboutClickFarms.com is truly a rich source of fresh clicks. This creates a spiral that can lead us to spend a significant amount of money on that site. You might wonder what the problem is. Our goal is to lower the CPC, and we have found a site on which we get very cheap clicks, so we should be spending a big portion of our budget on it and the advertiser will be thrilled. Possibly, but consider the following.
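The post does not restate the bid formula here, so the sketch below assumes a simple expected-value rule: bid equals the probability of a click times the value of a click, expressed per thousand impressions (CPM). The function name `bid_cpm` and the $2.00 value per click are hypothetical. The point is only that an inflated click estimate translates directly into a higher bid, which is what feeds the spiral.

```python
def bid_cpm(p_click, value_per_click):
    """Hypothetical expected-value bid, in dollars per thousand impressions.

    Any pricing rule that increases with p_click would behave the same way.
    """
    return p_click * value_per_click * 1000


baseline = bid_cpm(0.0006, 2.00)  # a typical site, 0.06% estimated CTR
inflated = bid_cpm(0.02, 2.00)    # a site whose estimated CTR has climbed to 2%
print(f"{baseline:.2f} vs {inflated:.2f} CPM")
```

With the same value per click, the inflated estimate bids roughly 33 times more per impression, so the model wins far more auctions on that site.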

On average, the clickthrough rate (CTR, the ratio of clicks to impressions) for an untargeted campaign is about 0.06%. This means that if you randomly buy impressions at a fixed price, you'll have to show about 10,000 ads for 6 people to click on your ad. With an optimised model that bids in a smart way, we can easily get around twice as many clicks for the same number of impressions. But on some sites, we've seen CTRs as high as 2%, which is 33 times the baseline. To us, that seems a little strange. It gets even stranger when you take a look at those sites and realise that they mostly have very low-quality content.
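The arithmetic in this paragraph can be checked in a few lines. The constants are the figures quoted above; `expected_clicks` is a hypothetical helper.

```python
BASELINE_CTR = 0.0006   # 0.06% for an untargeted campaign
SUSPICIOUS_CTR = 0.02   # 2%, as observed on some sites
IMPRESSIONS = 10_000


def expected_clicks(ctr, impressions):
    """Expected number of clicks for a given CTR and number of impressions."""
    return ctr * impressions


baseline_clicks = expected_clicks(BASELINE_CTR, IMPRESSIONS)
optimised_clicks = 2 * baseline_clicks            # "around twice as many clicks"
suspicious_clicks = expected_clicks(SUSPICIOUS_CTR, IMPRESSIONS)
lift = SUSPICIOUS_CTR / BASELINE_CTR              # how many times the baseline

print(f"baseline:   {baseline_clicks:.0f} clicks per {IMPRESSIONS:,} impressions")
print(f"optimised:  {optimised_clicks:.0f} clicks per {IMPRESSIONS:,} impressions")
print(f"suspicious: {suspicious_clicks:.0f} clicks per {IMPRESSIONS:,} impressions")
print(f"lift: {lift:.1f}x")
```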

We believe this is an example of one of the big challenges of the online advertising industry: fraudulent clicks. The problem is amplified in the RTB world, where machine learning algorithms are used to optimise campaigns. Simple models that only go after cheap clicks can be completely fooled and waste a lot of money buying worthless impressions that generate fraudulent clicks. The end-of-campaign report might look great CPC-wise, but in reality the advertiser didn't get a good ROI.

A simple first-step solution to this problem is what we call the Too Good To Be True (TGTBT) filter. Since most sites generating suspiciously high numbers of clicks are a little too greedy, they really stand out from the crowd with their incredibly high CTR. By blacklisting sites whose CTR is simply too good to be true relative to the other sites for the same campaign, we are able to ignore a lot of impressions that would probably lead to fraudulent clicks. This saves us money that we can then spend on better impressions, improving the campaign's true performance.

Finding suspicious sites

To find suspicious sites for a given campaign, we first need to get an idea of what seems to be a normal CTR for that campaign.

We model a site's CTR as a binomial random variable, where trials are impressions and successes are clicks. Since the distribution of sites for a campaign has a very long tail, we have typically shown only a small number of impressions on most sites. As a consequence, our estimates of those sites' CTRs are very unreliable and can be incredibly high. Imagine a site on which we've shown 100 impressions and, by pure chance, got 5 clicks. This gives us a CTR of 5%, roughly two orders of magnitude higher than normal. Do we think the true CTR of that site is 5%? Not really. To account for the frequent lack of data, we use the lower bound of a binomial proportion confidence interval instead. This gives us a pessimistic estimate of the CTR, so that sites with a low number of impressions get a reasonable CTR.
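The post does not say which binomial proportion confidence interval is used. As one common choice, the sketch below assumes the Wilson score interval and returns its lower bound; the function name `wilson_lower_bound` and the 95% default confidence level are assumptions.

```python
from math import sqrt
from statistics import NormalDist


def wilson_lower_bound(clicks, impressions, confidence=0.95):
    """Lower bound of the Wilson score interval for a binomial proportion.

    Returns a pessimistic CTR estimate: the fewer impressions, the further it
    sits below the raw clicks / impressions ratio.
    """
    if impressions == 0:
        return 0.0
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n = impressions
    p = clicks / n
    centre = p + z * z / (2 * n)
    margin = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre - margin) / (1 + z * z / n)


print(wilson_lower_bound(5, 100))       # raw CTR 5%, little data
print(wilson_lower_bound(500, 10_000))  # raw CTR 5%, much more data
```

For the 100-impression example above, the raw 5% CTR drops to a lower bound of roughly 2.2%, while the same 5% observed over 10,000 impressions keeps a lower bound of roughly 4.6%. The pessimistic estimate therefore shrinks small samples much more than large ones.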

We then compute the mean and standard deviation of the CTR for all our sites. Any site whose CTR is more than n standard deviations higher than the mean is treated as an outlier. For us, an outlier is a site whose CTR is too good to be true and is probably generating fraudulent clicks. So we blacklist it.
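A minimal sketch of this outlier test, assuming the per-site CTRs are the pessimistic estimates described above and picking a hypothetical threshold of `n_std = 3` (the post leaves n unspecified). It uses the population standard deviation from Python's `statistics` module; the function name is hypothetical.

```python
from statistics import mean, pstdev


def too_good_to_be_true(site_ctrs, n_std=3.0):
    """Return the sites whose CTR is more than n_std standard deviations above the mean.

    `site_ctrs` maps site -> pessimistic CTR estimate for a single campaign.
    """
    values = list(site_ctrs.values())
    if len(values) < 2:
        return set()  # not enough sites to define a "normal" CTR
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return set()  # every site looks the same; nothing stands out
    return {site for site, ctr in site_ctrs.items() if (ctr - mu) / sigma > n_std}


# 19 ordinary sites and one that is far ahead of the pack.
ctrs = {f"site{i}.example": 0.001 for i in range(19)}
ctrs["AllAboutClickFarms.com"] = 0.02
flagged = too_good_to_be_true(ctrs)
print(flagged)
```

One caveat of this design: with the population standard deviation, no single site among k sites can sit more than sqrt(k - 1) standard deviations above the mean (Samuelson's inequality). With `n_std = 3`, the filter can therefore only flag a site once a campaign has CTR estimates for at least 11 sites.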

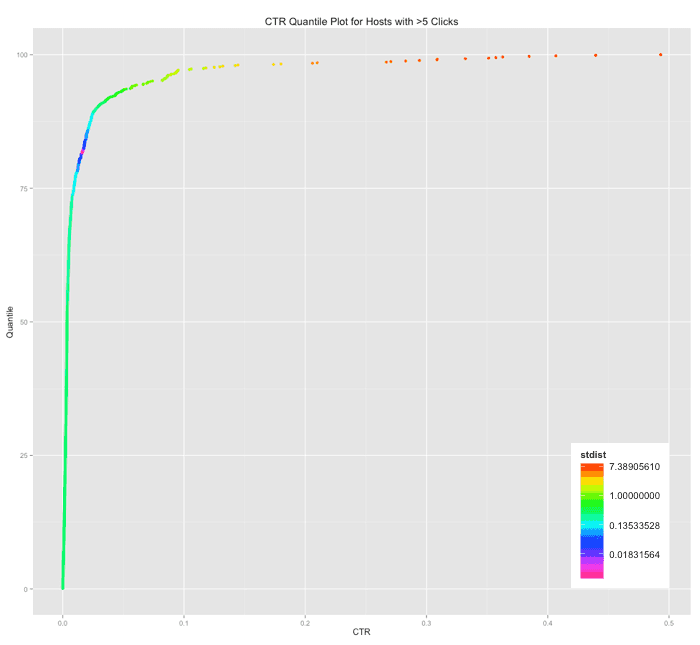

To visualise what we mean by outlier, consider the graph above, which shows the distribution of sites by CTR quantile for an actual campaign. The x-axis is CTR, the y-axis is the quantile, and each point is colored by the number of standard deviations it is from the mean. Remember that the CTR here is the lower bound of the confidence interval, so the sites in the top right quadrant have lots of impressions and are generating a massive number of clicks. They are without a doubt outliers.

In this CTR quantile plot of sites for an actual campaign, the vast majority of sites aren’t much more than 1 standard deviation away from the mean. The exceptions are the sites with extremely high CTRs in yellow and red at the top.

Blacklisting a site is a big deal because we are, in essence, blacklisting the best-performing sites. To make sure we don't hurt the campaign's performance by ignoring legitimate sites, we need to steer clear of false positives. As an added precaution, we introduced an intermediate step for sites whose CTR would be considered blacklistable but that have a very low number of impressions. Since we don't have enough impressions to be confident about their true CTR, we consider them suspicious and put them on a grey list. While a site is on that list, we only bid a fraction of the value we think its impressions have. Our reasoning is that we want a better estimate of those sites' CTR, but we're only willing to get it cheaply because they look suspicious. That way, we start saving our customers' money even earlier by paying less while we become more confident that those sites are generating fraudulent clicks.
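The grey-list step can be sketched as a three-way classification followed by a bid adjustment. The names below and the values of `MIN_IMPRESSIONS` and `GREY_BID_FRACTION` are hypothetical, chosen only for illustration; the post does not give the thresholds actually used.

```python
from enum import Enum

MIN_IMPRESSIONS = 1_000   # hypothetical: below this, the CTR estimate is not trusted
GREY_BID_FRACTION = 0.25  # hypothetical: bid a quarter of the model's value


class SiteStatus(Enum):
    OK = "ok"
    GREYLISTED = "greylisted"
    BLACKLISTED = "blacklisted"


def classify_site(is_outlier, impressions, min_impressions=MIN_IMPRESSIONS):
    """Outliers with little data go on the grey list; the rest are blacklisted."""
    if not is_outlier:
        return SiteStatus.OK
    if impressions < min_impressions:
        return SiteStatus.GREYLISTED
    return SiteStatus.BLACKLISTED


def final_bid(model_bid, status, grey_fraction=GREY_BID_FRACTION):
    """Return the bid to submit, or None to skip the auction entirely."""
    if status is SiteStatus.BLACKLISTED:
        return None
    if status is SiteStatus.GREYLISTED:
        return model_bid * grey_fraction
    return model_bid


status = classify_site(is_outlier=True, impressions=200)
print(status, final_bid(4.0, status))
```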

Note that we do not need a corresponding Too Bad To Be Worth Anything filter to blacklist underperforming sites. That behaviour is implicit in our economic modeling: our bid price will reflect that the probability of a click on those sites is almost zero. The TGTBT filter, on the other hand, is necessary for overperformers because they exploit the modeling process by falsely appearing to be the best deal in town.

What we caught in our net

We take a peek now and then at what the TGTBT filter catches. Below is a series of screenshots of sites that were all blacklisted around the same time. They all had incredibly high CTRs and, oddly enough, they all look alike.

As we said earlier, we establish what is TGTBT at the campaign level because not all campaigns perform the same: each one goes after a different audience, uses different creatives, and so on. But a site that generates fraudulent clicks will do so regardless of the campaign. That is why we allow models to warn each other that a particular site has been flagged. When a model gets a warning from another, it starts blacklisting that site as well. These early warnings save us money because we don't have to re-establish, campaign by campaign, that a site should be avoided.
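A minimal in-process sketch of campaigns warning each other, assuming a shared registry of flagged sites. `SharedSiteFlags` and `CampaignModel` are hypothetical names; a production system would need a real-time, distributed mechanism that the post does not describe.

```python
import threading


class SharedSiteFlags:
    """Hypothetical registry that campaign models use to warn each other."""

    def __init__(self):
        self._lock = threading.Lock()
        self._flagged = {}  # site -> id of the campaign that first flagged it

    def warn(self, site, campaign_id):
        with self._lock:
            self._flagged.setdefault(site, campaign_id)

    def is_flagged(self, site):
        with self._lock:
            return site in self._flagged


class CampaignModel:
    def __init__(self, campaign_id, shared_flags):
        self.campaign_id = campaign_id
        self.shared_flags = shared_flags
        self.blacklist = set()

    def flag_site(self, site):
        # Called when this campaign's own TGTBT filter blacklists a site.
        self.blacklist.add(site)
        self.shared_flags.warn(site, self.campaign_id)

    def should_bid(self, site):
        # A warning from any other campaign is enough to stop bidding.
        return site not in self.blacklist and not self.shared_flags.is_flagged(site)


flags = SharedSiteFlags()
airline = CampaignModel("airline", flags)
retail = CampaignModel("retail", flags)
airline.flag_site("AllAboutClickFarms.com")          # caught by the airline campaign
print(retail.should_bid("AllAboutClickFarms.com"))   # the retail campaign skips it too
```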

As with everything else we do at Datacratic, all the statistics required to determine whether a site is a source of fraudulent clicks, as well as the knowledge sharing between campaigns, are handled in real time. With these simple improvements, our models won't be fooled by click fraud because they are now fully aware that if a site looks too good to be true, it probably is.