Hacking an epic NHL goal celebration with a hue light show and real-time machine learning

See media coverage of this blog post.

In Montréal this time of year, the city literally stops and everyone starts talking, thinking and dreaming about a single thing: the Stanley Cup Playoffs. Even most of those who don’t normally care the least bit about hockey transform into die-hard fans of the Montréal Canadiens, or the Habs, as we also call them.

Below is a YouTube clip of the epic goal celebration hack in action. In a single sentence: I trained a machine learning model to detect in real time, from the live audio feed of a game, that the Habs had just scored, and to trigger a light show using Philips hues in my living room.

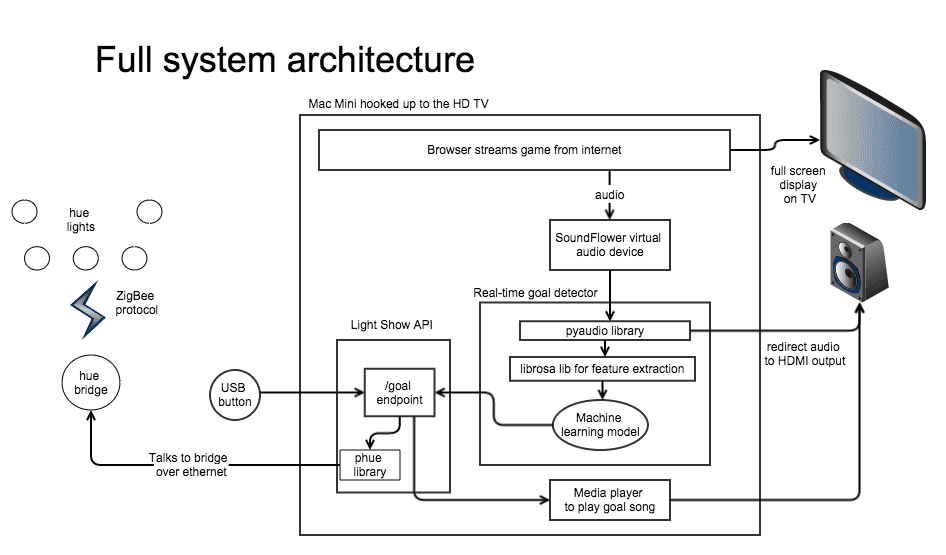

The rest of this post explains each step that was involved in putting this together. A full architecture diagram is available if you want to follow along.

The hack

The original goal (no pun intended) of this hack was to program a celebratory light show using Philips hue lights and play the Habs’ goal song when they scored a goal. Everything would be triggered using a big Griffin PowerMate USB button that would need to be pushed by whoever was the closest to it when the goal occurred.

That is already pretty cool, but can we take it one step further? Wouldn’t it be better if the celebratory sequence could be triggered automatically?

As far as I could find, there is no API or website available online that can give me reliable notifications within a second or two that a goal was scored. So how can we do it very quickly?

Imagine watching a hockey game blindfolded. I bet you would have no problem knowing when goals are scored, because a goal sounds a lot different than anything else in a game. There is of course the goal horn, if the home team scores, but also the commentator, who usually yells a very intense and passionate “GOOOAAAALLLLL!!!!!”. By hooking into the audio feed of the game and processing it in real time with a machine learning model trained to detect when a goal occurs, we could trigger the lights and music automatically, allowing all the spectators to dance and do celebratory chest-bumps without having to worry about pushing a button.

Some signal processing

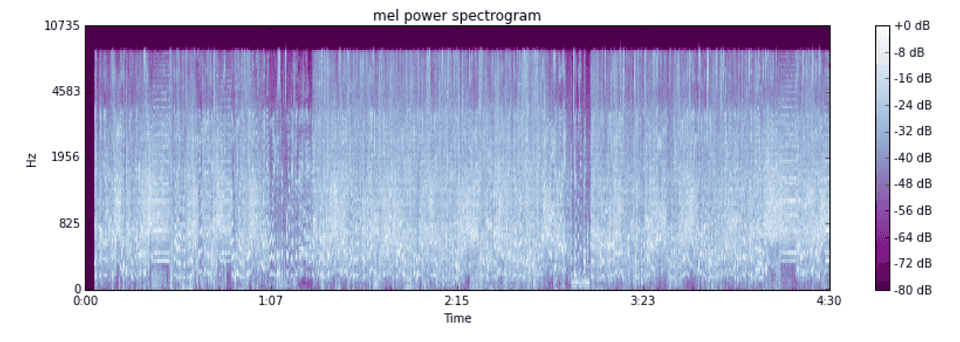

The first step is to take a look at what a goal sound looks like. The Habs’ website has a listing of all previous games with ~4 minutes video highlights of each game. I extracted the audio from a particular highlight and used librosa, a library for audio and music analysis, to do some simple signal processing. If you’ve never played with sounds before, you can head over to Wikipedia to read about what a spectrogram is. You can also simply think of it as taking the waveform of an audio file and creating a simple heat map over time and audio frequencies (Hz). Low-pitched sounds are at the lower end of the y-axis and high-pitched sounds are on the upper end, while the color represents the intensity of the sound.

We’re going to be using the mel power spectrogram (MPS), which is like a spectrogram with additional transformations applied on top of it.

You can use the code below to display the MPS of a sound file.

```python
# Mostly taken from: http://nbviewer.ipython.org/github/bmcfee/librosa/blob/master/examples/LibROSA%20demo.ipynb
import librosa
import librosa.display
import matplotlib.pyplot as plt
import numpy as np

# Load sound file (librosa resamples to 22050 Hz by default)
y, sr = librosa.load("filename.mp3")

# Let's make and display a mel-scaled power (energy-squared) spectrogram
S = librosa.feature.melspectrogram(y=y, sr=sr, n_mels=128)

# Convert to log scale (dB). We'll use the peak power as reference.
# Older librosa releases called this librosa.logamplitude(S, ref_power=np.max).
log_S = librosa.power_to_db(S, ref=np.max)

# Make a new figure
plt.figure(figsize=(12, 4))

# Display the spectrogram on a mel scale
# sample rate and hop length parameters are used to render the time axis
librosa.display.specshow(log_S, sr=sr, x_axis='time', y_axis='mel')

# Put a descriptive title on the plot
plt.title('mel power spectrogram')

# draw a color bar
plt.colorbar(format='%+02.0f dB')

# Make the figure layout compact
plt.tight_layout()

# Show the figure (not needed inside a notebook)
plt.show()
```

This is what the MPS of a 4-minute highlight of a game looks like:

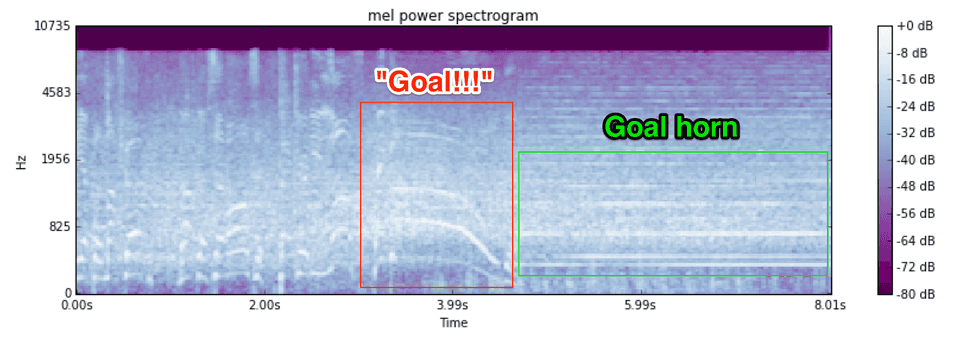

Now let’s take a look at an 8-second clip from that highlight, specifically when a goal occurred.

As you can see, there are very distinctive patterns when the commentator yells (the 4 big wavy lines), and when the goal horn goes off in the amphitheater (many straight lines). Being able to see the patterns with the naked eye is very encouraging in terms of being able to train a model to detect it.

There are tons of different audio features we could derive from the waveform to use as features for our classifier. However, I always try to start simple to create a working baseline and improve from there. So I decided to simply vectorize the MPS, which was computed on 2-second clips with frequencies up to 8 kHz, 128 Mel bands and a sampling rate of 22.05 kHz. Each MPS has a shape of 128x87, which results in a feature vector of 11,136 elements when vectorized.
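To see where the 128x87 shape comes from, here is a small back-of-the-envelope sketch. It assumes librosa's default hop length of 512 samples and centered frames, neither of which is stated in the post; the function name `mps_shape` is made up for illustration.

```python
SAMPLE_RATE = 22050   # Hz, the sample rate used in the post
CLIP_SECONDS = 2      # length of each training example
N_MELS = 128          # number of Mel bands
HOP_LENGTH = 512      # assumption: librosa's default hop length


def mps_shape(sample_rate=SAMPLE_RATE, seconds=CLIP_SECONDS,
              n_mels=N_MELS, hop_length=HOP_LENGTH):
    """Return (mel bands, time frames) for a clip, assuming centered frames."""
    n_samples = sample_rate * seconds
    # With centered frames there is one frame per hop, plus one
    n_frames = 1 + n_samples // hop_length
    return n_mels, n_frames


n_mels, n_frames = mps_shape()
feature_vector_length = n_mels * n_frames
print(n_mels, n_frames, feature_vector_length)  # 128 87 11136
```

Flattening the 128x87 matrix row by row gives the 11,136-element vector that is fed to the classifier.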

The machine learning problem

If you’re not familiar with machine learning, think of it as building algorithms that can learn from data. The type of ML task we need to do for this project is binary classification, which means distinguishing between two classes of things:

- positive class: the Canadiens scored a goal

- negative class: the Canadiens did not score a goal

Put another way, we need to train a model that can give us the probability that the Canadiens scored a goal given the last 2 seconds of audio.

A model learns to perform a task through training, which is looking at past examples of those two classes and figuring out what are the statistical regularities in the data that allow it to separate the classes. However, it is easy for a computer to learn things by heart. The goal of machine learning is producing models that are able to generalize what they learn to data they have never seen, to new examples. What this means for us is that we’ll be using past games to train the model but what we obviously want to do are predictions for future games in real-time as they are aired on TV.

Building the dataset

As with any machine learning project, there is a time when you will feel like a monkey, and that is usually when you’re either building, importing or cleaning a dataset. For this project, this took the form of recording the audio from multiple 4 minute highlights of games and noting the time in the clip when a goal was scored by the Habs or the opposing team.

Obviously, we’ll be using the Canadiens’ goals as positive examples for our classifier, since that is what we are trying to detect.

Now what about negative examples? If you think about it, the very worst thing that could happen to this system is for it to get false positives (falsely thinking there is a goal). Imagine we are playing against the Toronto Maple Leafs and they score a goal and the light show starts. Not only did we just get scored on and are bummed out, but on top of that the algorithm is trolling us about it by playing our own goal song! (This is naturally a fictitious example because the Leafs are obviously not making the playoffs once again this year.) To make sure that doesn’t happen, we’ll be using all the opposing team’s goals as explicit negatives. The hope is that the model will be able to distinguish between goals for and against because the commentator is much more enthusiastic for Canadiens’ goals.

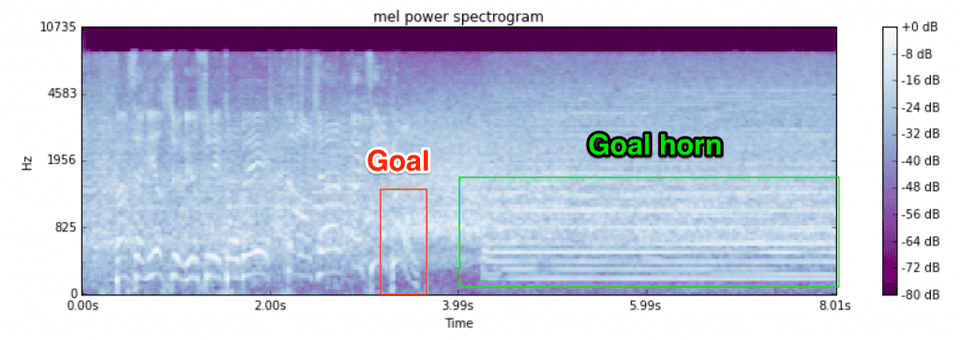

To illustrate this, compare the MPS of the Habs’ goal above with the example below of a goal against the Habs. The commentator’s scream is much shorter and the goal horn in the opposing team’s amphitheater is at very different frequencies than the one at the Bell Center. The goal horn only goes off when the home team scores, so the MPS below is taken from a game not played in Montréal.

In addition to the opposing team’s goals, we’ll use 50 randomly selected segments from each highlight that are far enough from an actual goal as negatives, so that the model is exposed to what the uneventful portions of a game sound like.
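The random negative sampling could look something like the sketch below. The post does not say how far "far enough" is, so the 10-second `min_gap`, the function name and the goal times in the example are all assumptions made for illustration.

```python
import random


def sample_negative_starts(clip_length, goal_times, n_samples=50,
                           window=2.0, min_gap=10.0, seed=0):
    """Pick start times (in seconds) of windows that stay away from every goal.

    min_gap is a hypothetical value: the post only says "far enough".
    """
    rng = random.Random(seed)
    starts = []
    attempts = 0
    max_attempts = n_samples * 1000  # avoid looping forever on short clips
    while len(starts) < n_samples and attempts < max_attempts:
        attempts += 1
        start = rng.uniform(0, clip_length - window)
        if all(abs(start - goal) >= min_gap for goal in goal_times):
            starts.append(start)
    return starts


# Made-up 4-minute highlight with goals at 35 s, 110 s and 200 s
goal_times = [35.0, 110.0, 200.0]
negatives = sample_negative_starts(240.0, goal_times)
print(len(negatives))  # 50
```

Each returned start time marks a 2-second window that would be cut out of the highlight and labeled as a negative example.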

False negatives (missing an actual goal) are still bad, but we prefer them over false positives. We’ll talk about how we can deal with them later on.

Note that I did not do any alignment of the sound files, meaning the commentator yelling does not start at exactly the same time in every clip. The dataset ended up consisting of 10 games, with 34 goals by the Habs and 17 goals against them. The randomly selected negative clips added another 500 examples.

Training and picking a classifier

As I mentioned earlier, the goal was to start simple. To that effect, the first models I tried were a simple logistic regression and an SVM with an rbf kernel over the raw vectorized MPS.

I was a bit surprised that this trivial approach yielded usable results. The logistic regression got an AUC of 0.97 and an F1 score of 0.63, while the SVM got an AUC of 0.98 and an F1 score of 0.71. Those results were obtained by holding out 20% of the training data to test on.

At this point I ran a few complete game broadcasts through the system and each time the model detected a goal, I wrote out the corresponding 2-second sound file to disk. A bunch were false positives that corresponded to commercials. The model had never seen commercials before because they are not included in game highlights. I added those false positives to the negative examples, retrained and the problem went away.

However, the AUC and F1 scores were not an accurate estimate of the performance I could expect, because I was not necessarily planning to use a single prediction as the trigger for the light show. Since I’m scoring many times per second, I could try decision rules that would look at the last n predictions to make a decision.

I ran a 10-fold cross-validation, holding out an entire game from the training set, and actually stepping through the held out game’s highlight as if it was the real-time audio stream of a live game. That way I could test out multi-prediction decision rules.
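With 10 games in the dataset, holding out one full game at a time gives exactly 10 folds. A minimal sketch of that split, with made-up game identifiers and a hypothetical function name:

```python
def leave_one_game_out(game_ids):
    """Yield (training games, held-out game) pairs, one fold per game."""
    for held_out in game_ids:
        train = [g for g in game_ids if g != held_out]
        yield train, held_out


# Hypothetical identifiers for the 10 recorded games
games = ["game_%02d" % i for i in range(1, 11)]
folds = list(leave_one_game_out(games))
print(len(folds))  # 10
```

Splitting by game rather than by clip keeps clips from the same broadcast out of both the training and the test side of a fold.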

I tried two decision rules:

- average of last n predictions over the threshold t

- m positive votes in the last n predictions, where a YES vote requires a prediction over the threshold t
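The two rules above can be sketched in a few lines of plain Python. The names are made up for illustration, and the default values (n=20, m=5, t=0.9) are the ones the post ends up settling on; the prediction stream is invented.

```python
from collections import deque


def average_rule(predictions, threshold):
    """Rule 1: fire when the mean of the recent predictions is over the threshold."""
    return sum(predictions) / len(predictions) > threshold


def vote_rule(predictions, threshold, min_votes):
    """Rule 2: fire when at least min_votes recent predictions are over the threshold."""
    votes = sum(1 for p in predictions if p > threshold)
    return votes >= min_votes


class GoalDetector:
    """Keeps the last n predictions and applies the voting rule to them."""

    def __init__(self, n=20, threshold=0.9, min_votes=5):
        self.recent = deque(maxlen=n)  # old predictions fall off automatically
        self.threshold = threshold
        self.min_votes = min_votes

    def update(self, probability):
        self.recent.append(probability)
        return vote_rule(self.recent, self.threshold, self.min_votes)


# Made-up stream of goal probabilities: quiet play, then a commentator yell
stream = [0.1] * 10 + [0.95] * 5
detector = GoalDetector()
fired = [detector.update(p) for p in stream]
print(fired.index(True))  # 14: the fifth high-probability prediction triggers
```

Requiring several votes means a single spurious high score, for example from a loud commercial, is not enough to start the show.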

For each combination of decision rule, hyper-parameters and classifier, there were 4 metrics I was looking at:

- Real Canadiens goal that the model detected (true positive)

- Opposing team goal that the model detected (really bad false positive)

- No goal but the model thought there was one (false positive)

- Canadiens goal the model did not detect (false negative)

SVMs ended up being able to get more true positives but did a worse job on false positives. What I ended up using was a logistic regression with the second decision rule. To trigger a goal, there need to be 5 positive votes out of the last 20, and a vote is cast if the probability of a goal is over 90%. The cross-validation results for that rule were 23 Habs goals detected, 11 not detected, 2 opposing team goals falsely detected and no other false positives.

Looking at the Habs’ 2014-15 season statistics, they scored an average of 2.61 goals per game and allowed 2.24. This means I can loosely expect the algorithm to miss 1 Habs goal per game (0.84 to be more precise) and to go off for a goal by the opposing team once every 4 games.
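Those expectations follow directly from the cross-validation counts and the season averages. The arithmetic, spelled out as a quick sketch (variable names are mine):

```python
# Cross-validation counts reported in the post
habs_detected, habs_missed = 23, 11
opp_false_alarms, opp_goals = 2, 17

# 2014-15 season averages quoted in the post
habs_goals_per_game = 2.61
opp_goals_per_game = 2.24

# Share of Habs goals the model misses, scaled to goals per game
miss_rate = habs_missed / (habs_detected + habs_missed)
missed_per_game = miss_rate * habs_goals_per_game

# Share of opposing goals that set off the show, scaled to goals per game
false_alarm_rate = opp_false_alarms / opp_goals
false_alarms_per_game = false_alarm_rate * opp_goals_per_game
games_per_false_alarm = 1 / false_alarms_per_game

print(round(missed_per_game, 2))        # 0.84
print(round(games_per_false_alarm, 1))  # 3.8
```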

Note that the trained model only works for the specific TV station and commentator I trained on. I trained on regular season games aired on TVA Sports because they are airing the playoffs. I tried testing on a few games aired on another station and basically detected no goals at all. This means performance is likely to go down if the commentator catches a cold.

Philips hue light show

Now that we’re able to do a reasonable job at identifying goals, it was time to create a light show that rivals those crazy Christmas ones we’ve all seen. This has 2 components: playing the Habs’ goal song and flashing the lights to the music.

The goal song I play is not the current one in use at the Bell Center, but the one that they used in the 2000s. It is called “Le Goal Song” by the Montréal band L’Oreille Cassée. To the best of my knowledge, the song is not available for sale and is only available on YouTube.

Philips hues are smart LED multicolor lights that can be controlled using an iPhone app. The app talks to the hue bridge that is connected to your wifi network and the bridge talks to the lights over the ZigBee Light Link protocol. In my living room, I have the 3 starter-kit hue lights, a light-strip under my kitchen island and a Bloom pointing at the wall behind my TV. Hues are not specifically meant for light shows; I usually use them to create an interesting atmosphere in my living room.

I realized the lights can be controlled using a REST API that runs on the bridge. Using the very effective phue library, we can interface with the hue bridge API from Python. At that point, it was simply a question of programming a sequence of color and intensity calls that would roughly go along with the goal song I wanted to play.

Below is an example of using phue to make each light cycle through the colors blue, white and red 10 times.

```python
import time

from phue import Bridge

# IP address of the hue bridge on the local network (placeholder: use your own)
bridge_ip = "192.168.1.2"

# connect to the hue bridge
b = Bridge(bridge_ip)
b.connect()

# Set up colors as CIE xy coordinates
colors = {
    "bleu": [0.1393, 0.0813],
    "blanc": [0.3062, 0.3151],
    "rouge": [0.674, 0.322],
}
color_keys = list(colors)

# change each light color 10 times
for cycle in range(10):
    # hue light IDs normally start at 1; this assumes five lights with IDs 1 to 5
    for light in range(1, 6):
        # on each cycle, each light goes to the next color, which is
        # either blue, white or red
        next_color = colors[color_keys[(cycle + light) % 3]]
        # transitiontime is a whole number of tenths of a second
        b.set_light(light, 'xy', next_color, transitiontime=2)
    time.sleep(1)
```

I deployed this as a simple REST API using bottle. This way, the celebratory light show is decoupled from the trigger, and the lights can be triggered simply by calling the /goal endpoint.
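For readers who want to see the shape of such an endpoint, here is a minimal sketch. It is not the bottle app from the post: to stay dependency-free it swaps in Python's standard-library `http.server`, and `run_light_show`, `handle_path` and `serve` are hypothetical names. Port 8082 is the one the detector calls later in the post.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


def run_light_show():
    """Hypothetical stand-in for the phue color sequence."""
    print("GOAL! starting the light show")


def handle_path(path, start_show=run_light_show):
    """Map a request path to an (HTTP status, body) pair."""
    if path == "/goal":
        # Run the show in the background so the HTTP call returns immediately
        threading.Thread(target=start_show, daemon=True).start()
        return 200, "light show started\n"
    return 404, "not found\n"


class GoalHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, body = handle_path(self.path)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(host="127.0.0.1", port=8082):
    """Block forever, serving the /goal endpoint."""
    HTTPServer((host, port), GoalHandler).serve_forever()
```

Calling `serve()` starts the server; any client, whether the audio detector or a button script, can then trigger the show with a GET request to `/goal`.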

Hooking up to the live audio stream

My classifier was trained on audio clips offline. To make this whole thing come together, the missing piece was the real-time scoring of a live audio feed.

I’m running all of this on OS X, and to get the live audio into my Python program I needed two components: Soundflower and pyaudio. Soundflower acts as a virtual audio device and allows audio to be passed between applications, while pyaudio is a library that can be used to play and record audio in Python.

To configure this, the system audio output is first set to the Soundflower virtual audio device. At that point, no sound will be heard because nothing is being sent to a physical output device. In Python, you can then configure pyaudio to capture the audio coming into the virtual audio device, process it, and resend it to the normal output device. In my case, that is the HDMI output going to the TV.

As you can see from the code snippet below, you start listening to the stream by giving pyaudio a callback function that will be called each time the captured frames buffer is full. In the callback, I add the frames to a ring buffer that keeps 2 seconds worth of audio, because that is the size of the training examples I used to train the model. The callback gets called many times per second. Each time, I take the contents of the ring buffer and score it using the classifier. When a goal is detected by the model, this triggers a REST call to the /goal endpoint of the light show API.

```python
import time

import librosa
import numpy as np
import pyaudio
import requests

# RingBuffer, model and findAudioDevices are helpers that are not shown here.

# ring buffer will keep the last 2 seconds worth of audio
ringBuffer = RingBuffer(2 * 22050)


def callback(in_data, frame_count, time_info, flag):
    audio_data = np.frombuffer(in_data, dtype=np.float32)

    # we trained on audio with a sample rate of 22050 so we need to convert it
    audio_data = librosa.resample(audio_data, orig_sr=44100, target_sr=22050)
    ringBuffer.extend(audio_data)

    # machine learning model takes waveform as input and
    # decides if the last 2 seconds of audio contains a goal
    if model.is_goal(ringBuffer.get()):
        # GOAL!! Trigger light show
        requests.get("http://127.0.0.1:8082/goal")

    # pass the audio through unchanged to the output device
    return (in_data, pyaudio.paContinue)


pa = pyaudio.PyAudio()

# function that finds the index of the Soundflower
# input device and HDMI output device
dev_indexes = findAudioDevices()

stream = pa.open(format=pyaudio.paFloat32,
                 channels=1,
                 rate=44100,
                 output=True,
                 input=True,
                 input_device_index=dev_indexes['input'],
                 output_device_index=dev_indexes['output'],
                 stream_callback=callback)

# start the stream
stream.start_stream()

while stream.is_active():
    time.sleep(0.25)

stream.stop_stream()
stream.close()
pa.terminate()
```
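The `RingBuffer` class used in the snippet is not shown in the post. A minimal sketch of one way to get the same `extend`/`get` interface, using a bounded `deque` from the standard library (an assumption on my part; the original may well be built on a numpy array):

```python
from collections import deque


class RingBuffer:
    """Fixed-size buffer that keeps only the most recent samples."""

    def __init__(self, size):
        # A deque with maxlen silently drops the oldest items when full
        self._data = deque(maxlen=size)

    def extend(self, samples):
        self._data.extend(samples)

    def get(self):
        """Return the buffered samples, oldest first."""
        return list(self._data)

    def __len__(self):
        return len(self._data)


buf = RingBuffer(5)
buf.extend([1, 2, 3])
buf.extend([4, 5, 6, 7])
print(buf.get())  # [3, 4, 5, 6, 7]
```

In the detector, the size would be `2 * 22050` samples, and `get()` would typically be wrapped in `np.array(...)` before computing the spectrogram.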

Full architecture

My TV subscription allows me to stream the hockey games on a computer in HD. I hooked up a Mac Mini to my TV and that Mac will be responsible for running all the components of the system:

- displaying the game on the TV

- sending the game’s audio feed to the Soundflower virtual audio device

- running the Python goal detector that captures the sound from Soundflower, analyses it, calls the goal endpoint if necessary and resends the audio out to the HDMI output

- running the light show API that listens for calls to the goal endpoint

Since the algorithm is not perfect, I also hooked up the Griffin USB button that I mentioned at the very beginning of the post. It can be used to either start or stop the light show in case we get a false negative or false positive respectively. It was very easy to do this because a push of the button simply calls the /goal endpoint of the API that can decide what it should do with the trigger.
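One simple way the API could "decide what to do with the trigger" is to treat every call as a toggle: start the show if it is idle, stop it if it is running. The post does not show this logic, so the class below is only a guess at the idea, with hypothetical names.

```python
class LightShowState:
    """Tracks whether the show is running and toggles it on each trigger."""

    def __init__(self):
        self.running = False

    def trigger(self):
        """Handle one call to /goal: start the show if idle, otherwise stop it."""
        self.running = not self.running
        return "started" if self.running else "stopped"


state = LightShowState()
print(state.trigger())  # started (button pressed after a missed goal)
print(state.trigger())  # stopped (button pressed to cancel the show)
```

A real implementation would also reset `running` once the song ends, so the next trigger starts a fresh show.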

Production results and beyond

After two playoff games against the Ottawa Senators, the model successfully detected 75% of the goals (missing 1 per game) and got no false positives. This is in line with the expected performance, and the USB button was there to save the day when the detection did not work.

This was done in a relatively short amount of time and represents the simplest approach at each step. To make this work better, there are a number of things that could be done: for instance, aligning the audio files of the positive examples, trying different example lengths, trying more powerful classifiers like a convolutional neural net, doing simple image analysis of the video feed to try to determine on which side of the ice we are, etc.

In the meantime, enjoy the playoffs and Go Habs Go!

Media Coverage

Mini-Documentary: ESPN Fan Stories: Robot Fan Cave - April 2019

This awesome mini-doc was produced by our friends at Hodge Films for ESPN.

Talks

- PyCon Canada, Toronto - November 7, 2015

- Montréal Python, Montréal - November 23, 2015

In the media

- Best goal celebration ever? Hockey fan creates epic light show in his living room with machine-learning technology [Information Age]

- Canadiens fan creates ultimate goal celebration in living room [Yahoo! Sports]

- Canadiens fan rigs living room for world’s best living-room goal celebration [Washington Post]

- Canadiens Fan Creates Epic Goal Celebration Light Show in Living Room [bleacher report]

- Montreal Canadiens fan and computer scientist creates ultimate Habs light show [CBC News]

- François Maillet a conçu le système de lumières parfait pour célébrer les buts des Canadiens en séries [Huffington Post Québec - FR]

- Audio algorithm detects when your team scores [Hackaday]

- Top 10 Machine Learning Videos on YouTube [KDnuggets]

- Mordu du CH, il fait disjoncter son système d’éclairage [TVA Sports - FR vidéo]

- Hacker news

- Reddit: posted by me, posted by someone else

- Un spectacle de lumières pour chaque but du CH [Le messager de Verdun - FR]

- Philips Hue Sports Goal Celebration Light Show [Hue Home Lighting]

70 thoughts on "Hacking an epic NHL goal celebration with a hue light show and real-time machine learning"

Commented 2015-04-22 20:00:09

This is awesome! I really like your approach to this. I've been working on a similar setup for the Anaheim Ducks but using a Twitter feed watching certain keywords. (We tweet "#AnaheimDucksGoooaaalll" when they score.) I've been recording a search of related tweets to a database and updating a webpage graph for now. The plan is to hook it up to lights, an LED matrix, and one of those red goal lights. I agree about no "realtime" way to get it from the website. Go Habs and Ducks!

Commented 2015-04-22 16:16:01

Wow, this is really neat! Go Habs go!

Commented 2015-04-22 16:18:57

Great post. Do you have any more information on what tools/libraries you used to implement the machine learning model?

Cheers

Commented 2015-04-22 16:29:21

@Scott: logreg and svm in scikit learn for this initial version. Since what I did is really simple, I'm planning to improve the ML in the near future using [MLDB](http://mldb.ai), the ML database we're developing at [Datacratic](http://datacratic.com).

Commented 2015-04-22 16:30:46

@Alan: I don't have any experience with a Raspberry Pi. But the processing and scoring took about 50% of a core of a fairly recent i7, so I'm not sure it would be fast enough without spending more time optimizing things.

Commented 2015-04-22 16:42:25

I understand that it will also work if the announcer goes "Scores!" or "Et le but!"

And the algo will only get better as we make our way to the Stanley Cup!

Next step is to detect whenever Dale Weise scores and make a special celebration.

Go! Habs! Go!

Commented 2015-04-22 18:00:48

Awesome hack!! Any plans on open sourcing any of this project? I'd love to see how you did the machine learning/training.

Commented 2015-04-22 19:09:02

Would you be willing to put the code into one repository on Github?

Commented 2015-04-22 15:45:35

Instead of a Mac mini, would this be hackable into a Raspberry Pi?

Commented 2015-04-22 09:53:32

Thanks. Googled a bit. Saw ESPN used to have some sort of API but it's no longer available. Also checked IFTTT but didn't find anything that looked suitable.

Commented 2015-04-22 07:08:09

How did it go when the Habs were in Ottawa?

Commented 2015-04-22 09:51:56

Got the two Habs goals but it also went off for the Sens goal. But that's in line with the performance I was expecting, which is to miss 1 Habs goal per game and go off for an opposing team's goal once every 4 games.

Commented 2015-04-22 09:52:41

Gliffy Diagrams chrome plugin

Commented 2015-04-21 22:43:27

Your setup is awesome! I love it. Great work.

> As far as I could find, there is no API or website available online that can give me reliable notifications within a second or two that a goal was scored. So how can we do it very quickly?

What APIs and/or websites have you checked ?

Commented 2015-04-22 00:16:19

What software did you use to generate the architectural diagram?

Commented 2015-04-23 16:40:23

Congratulations for your hack. On my side, I've tried the IFTTT app and the results weren't really there... I've made a recipe with ESPN and Hue channels, but there was a really big delay so it was pretty useless and the effect was not so cool.

Commented 2015-04-23 16:43:30

Bruins fan here, just gotta say this is incredible. Have always dreamed of having something like this in my living room, but I think the wife might not like it very much.

Commented 2015-04-23 16:44:39

@Kevin: Thanks! I've put code snippets in the blog post with the critical parts of it. The ML of this first version is very simple. Pretty vanilla scikit learn. I'm planning on doing a follow up with a focus on improving the ML.

Commented 2015-04-23 20:06:19

Wow, this is really cool. I can imagine the party. You could buy a Budweiser Red Light and reverse it... but I prefer your approach ;)

Commented 2015-04-22 21:39:23

@Kevin: It's really a kind of unique and hacky setup so it's not really open-sourceable as is. I've put up most of the critical snippets in the blog post so that should get you more than started. I'd be happy to answer any questions.

Commented 2015-04-22 22:01:11

Wow what a cool project! Très bien!

As a huge hockey fan (go Red Wings! We might be seeing you in the next round), this project immediately piqued my interest, and as a programmer, I was totally amazed by your project. Super cool on so many fronts! The machine learning aspect, the hardware integration. Wow! I would love to do a build for my team and see the red lights turn on when Datsyuk puts one in the net!

Encore, très magnifique et super intéressant!

Cheers,

Joe Gibson

Commented 2015-04-23 06:59:33

Great post, man. There was this startup a few years ago that was trying to bring the hue lights to homes, but they failed :( ... https://vimeo.com/77999940 but I think that they still have a functional and public API.

Commented 2015-04-23 10:14:41

This is just absolutely fantastic! Might steal your idea and try to build something for the upcoming IIHF World Championship (must find a way to differentiate the commentary for the different national teams though...).

Awesome work!

Commented 2015-04-23 13:25:16

That was a lot of work - I hope you enjoy it next season. Because it's not going to get much more work this year.....

Commented 2015-04-23 13:41:41

Poking around the web I saw the NHL has some webservices available but undocumented..

http://live.nhl.com/GameData/SeasonSchedule-20142015.json - list of games and game IDs

http://live.nhl.com/GameData/20142015/2014030114/gc/gcsb.jsonp - information for a specific game. Replace 20142015 in the URL with the season and 2014030114 with the game ID for the game you want to look at. Not sure how real-time it is. :)

Commented 2016-03-15 14:56:28

Hey- this is awesome. I have a question. I'd have to do this through the button press by the sounds of it rather than voice (for baseball, football etc)

1) how does the computer know to cue the celebration by the usb button tap as you originally did it? Is there a software or something locally on the computer telling the media player with the goal horn plus hue to cue it up? I'm just unsure about how this part works.

Thanks!

Commented 2015-05-04 16:28:55

There are 2 things that are too bad - 1) You use a Sens vs Habs game for demo. Go Sens Go! 2) Too bad PyCon 2016 isn't in Montréal. It would've been a great talk if you could demo there. They're in Portland next year, afaik.

Commented 2015-05-04 19:02:01

Hey François, awesome set up you created! Watching that demo video never gets old.

I'll have to admit, I'm really intrigued in how you're able to play the theme song through the media player to the stereo. I only know of media players receiving bluetooth. What type of media player do you use? And are you actually receiving the REST call or sending it through bluetooth?

Commented 2015-04-25 08:44:31

@Neil: We do have the score display. However the displayed score doesn't change within a second of a goal. Some stations have an animation and write "GOAL!!" but then you still need to figure out who scored. I'm considering improving the accuracy of the system by doing both audio and video analysis but audio seemed like an easier first step.

Commented 2016-03-24 19:26:37

@Derek: You can get software from Griffin for the Powermate that essentially allows you to specify what to do when the button is pressed. I had the button run an AppleScript that did two things: a) run an 'open' shell command on the goal's song MP3 file telling it to open it up with VLC b) do a REST call to my goal API to start the lights.

If you only want to start a song, then maybe there's an even simpler way in the PowerMate's UI to do that, without using an Apple Script.

Cheers!

Commented 2016-03-24 21:51:35

Francois I have the python program tied into my lights, they are changing the colors and flashing, how did you set it to find the starting state of the light and then return to that state after the program is ran?

Commented 2016-03-25 10:56:27

@Kyle: Take a look at the phue examples: https://github.com/studioimaginaire/phue. You just have to store what the color and brightness settings for every light is before starting flashing the lights. Once you're done, just set them back to what they were.

Commented 2016-03-25 11:50:33

I looked at those, they helped me get it set up, but am unsure on the specific code i would need to write, I tried to retrieve the status using the b.get_light command but it is not returning a value. I can use b.set_light and set the light to on/off or color i choose. Also I am using transistiontime=0 but it is not 0, there is a delay. I want them to flash red, then to white, then alternate between white and red. during the change from red to white the turn blue then white, and from white to red the turn pink then red. Any ideas? I can send you the full code i have if you need. If you want to email me directly my email is kbostick88 at gmail. Thanks!

Commented 2016-10-22 11:17:05

@Colin: Glad to hear you made something similar inspired by this post! Was it also for hockey or some other use-case? Anything you can share?

Good idea for the random noise. That certainly would help in terms of expanding the model's view of what's possible and showing it the vast areas of the feature space where it's all negative. To achieve something a bit similar, since I had access to many recordings of games, I ran a bunch of them through the system at high speed and manually looked at the examples that got considered as positives, and added the bad ones to the negative examples. That took care of most of what didn't sound similar to a goal, leaving false positives that actually sounded like goals (like goals for the wrong team).

Cheers!

Commented 2016-12-09 15:10:15

Hi there. As a fellow hockey fan I love what you've done here. I've got a question for you.....I'm not at all familiar with programming but I am somewhat savvy with audio/video wiring etc. I'm interested in building something similar to what you made, but by using the triggered USB button or a remote control that you initially alluded to in your post.

I want to be able to push a trigger button when my team scores and have a goal horn and song play over my speakers. I have the goal horn and song in an mp3 format. The trick is I'd also like the trigger button to power on siren lights I have as well. Is there something out there that would achieve this? The USB trigger button that you initially described, what does that have to connect to that would make this work? I appreciate any help/suggestions. You can e-mail me at ddonjon@hotmail.com.

-Derek

Commented 2017-01-24 09:24:54

How easy is this to implement for someone without any programming experience? I am mainly looking to be able to press a button when my team scores and have the light show play as well as a goal horn sound play though my speakers. It would be perfect if you created an install that could be used on a PC and is triggered when a USB button was pressed. I would pay for something like this.

Commented 2016-10-20 18:50:56

Thanks for the idea! I've made a similar listening script / training algorithm because of this blog. I've talked around as well and for the false positives, I think you can mitigate them quite a bit by including just random noise in your negative data set. For my training, I simply made a handful of WAVs that came from numpy's random and I haven't seen a false positive since. Might be worth trying, in case you haven't already.

Commented 2016-10-22 17:31:04

Good ideas! The project was to basically log how often my wife's cat meows. He's really annoying and I want to quantify just how annoying he is when he wakes us up in the middle of the night :-)

Thanks again for the idea though! It was a good learning exercise for an ML project and I really enjoyed learning more about the MFC in audio processing. I'd always wondered how services like Shazam worked and now I think I have a pretty good understanding.

Commented 2019-03-30 20:44:10

Hi, I am trying something similar but need advice, as I do not have any electronics knowledge. I am using Home Assistant and have hard-wired a Wemos D1 mini to the goal light speaker wire. It worked as an automation trigger for about 30 minutes, then fried the D1. Apparently I need an opto-isolator, but these seem to be only for Arduinos. I have now tried a Schlage Z-Wave door sensor, as it has a 2-wire block, but I get "interference", I think because the signal lasts several seconds instead of being a momentary-type switch. I'd like to solder to the pinouts instead of the bare wires. Any suggestions about reducing the voltage, combining the 2 circuits, or what pinouts I could use besides the bare audio wires?

Commented 2017-02-17 05:38:29

You can avoid the resampling step by giving pyaudio (or pysoundcard, which succeeds pyaudio) the audio settings you want directly.

@Michael - having something like this happen when you press a button would be very straightforward and would make a good learning project. Start with being able to play a sound, then move on to getting it to work when you press a button.

Commented 2015-05-22 17:55:27

This video inspired me to spend hundreds of dollars on hue lights and I absolutely love them. My question is: What app have you found works best for programming the light sequence and animations of the lights themselves?

The stock hue app is rather clunky and there are too many third party apps to choose from.

Thanks !

Commented 2017-08-05 11:09:38

The script triggered by pressing the Griffin USB button should start both the light show and the music at the same time. In my setup, the USB button ran the exact same script as the automatic trigger from the audio stream. That script did a REST call to the light show API and started VLC with the goal song. Hope this helps!
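Roughly, such a script could look like the sketch below. It is not the actual script: the light show URL, the song path and the function names are all hypothetical placeholders, and it assumes a `vlc` binary on the PATH and a light show service that starts on a plain POST.

```python
import subprocess
import urllib.request

LIGHT_SHOW_URL = "http://localhost:8080/goal"  # hypothetical endpoint
GOAL_SONG = "goal_song.mp3"                    # hypothetical file


def build_vlc_command(song_path):
    """Command line that plays one file without a GUI and then exits."""
    return ["vlc", "--intf", "dummy", "--play-and-exit", song_path]


def start_light_show(url):
    """Send an empty POST to the (assumed) light show REST API."""
    request = urllib.request.Request(url, data=b"", method="POST")
    with urllib.request.urlopen(request, timeout=2) as response:
        return response.status


def celebrate(url=LIGHT_SHOW_URL, song_path=GOAL_SONG,
              post=start_light_show, launch=subprocess.Popen):
    """Start the music without blocking, then kick off the light show."""
    player = launch(build_vlc_command(song_path))  # returns immediately
    post(url)
    return player


# Both the USB button handler and the audio detector would simply call:
# celebrate()
```

Because `subprocess.Popen` does not wait for VLC to finish, the song and the lights start within a fraction of a second of each other.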

Commented 2017-02-07 12:38:53

I see you did this for the playoffs last season but I have to wonder, did you do anything to deal with nationally syndicated broadcasts? In my area, after the first round all other playoff games are broadcast (and therefore called) by NBC's national broadcast team instead of the local broadcast team. On those national broadcasts, have you worked out a way to differentiate between which team scores? The play-by-play guy (on the east coast it's generally Doc Emrick for NBC) doesn't differentiate goal calls for either team. How would you factor this in?

Commented 2017-06-20 12:41:47

How would one go about making this work for just pressing the Griffin USB?

I have read your article a few times and I am confident I understand how to program the light show. However, I am not sure how you would go about syncing that with an audio clip, since I would not be running it with a live stream.

Any help or tips to get me in the right direction would be appreciated!

Thank you!

Commented 2015-04-24 08:40:00

Nice work! I'm guessing that data from Habs-Sens games are probably the hardest to train with... Down here in Washington we have a lot of traitors - ugh, I mean transplants - so regular season opposition goals can be nearly as distinct as home team goals... less so in the playoffs, of course (WE ARE LOUDER!).

How are your results if you train on more of those goals where you let more of the other team's fans in the building?

Commented 2015-04-24 08:17:06

This is awesome! I'm curious why you went with processing the audio feed, rather than the video? Does Canadian hockey not have the score display in a fixed position on the screen? That would make detecting a goal much more deterministic.

Commented 2015-04-24 04:13:25

Great stuff! We think this is pretty impressive also, so we have given you a little mention on the hue developers portal.

http://www.developers.meethue.com/

Commented 2015-05-03 07:36:19

@Clod: The training was done with games aired on TVA because they're airing the playoffs. But I tried the system on an RDS game before the season ended. The model did not detect a single Habs goal even though there were 3 in the game if memory serves. The model locks on the TVA Sports commentator's voice. It's not about just triggering when there's a loud sound; the model learned what frequency pattern represents that specific commentator yelling "Goalll!!". Another commentator's voice will have a different timbre, so it'll sound different and look different to the model. So it's a very simple voice recognition system that only detects "He scores!" or "Goal".

The only way to make it work for other stations would be to create a dataset for those stations' commentators. However, if you take CBC for instance, I'm pretty sure there is less of a difference between a goal for or against the Habs, especially if they're playing against another Canadian team. The fact that the francophone stations are super biased towards the Habs probably makes it much easier for the model!

As for the Als, let's start by winning the cup and we'll see about football after :) Thanks for your comment.

Commented 2015-04-28 20:05:39

@Alex: For sure! I did a variation on that without the button. I would play back past games in their entirety and capture every goal the system detected. Then it only took a few minutes to put them in the positive or negative list. That's the way I captured false positives in commercials and intermissions.

Commented 2015-04-28 13:53:02

I'm not sure how the USB trigger works, but could you potentially record the instances of mis-classification and add them back into your training set? Then you would be improving the accuracy of the model after each game, with the model having you act as an expert witness.

Commented 2015-08-25 20:20:11

@Guus: Take a look at this gist: https://gist.github.com/mailletf/3484932dd29d62b36092#file-gistfile1-py. The MPS is in the log_S variable on line 13. Vectorizing it simply means taking every row of that matrix and concatenating them to form a very long feature vector. You can do that this way: log_S.reshape(-1). Look at the numpy doc on reshape if you want more details.
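As a minimal sketch of that vectorization step, here is a small made-up matrix standing in for `log_S` (the real one comes from the gist and is much larger). The shape and values are purely illustrative:

```python
import numpy as np

# Stand-in for log_S: 3 mel bands (rows) x 4 time frames (columns).
log_S = np.arange(12, dtype=float).reshape(3, 4)

# Concatenate the rows, one after the other, into one long feature vector.
feature_vector = log_S.reshape(-1)

print(log_S.shape)           # (3, 4)
print(feature_vector.shape)  # (12,)
```

Each audio window then becomes one such vector, and every window needs the same length so the vectors line up as rows of the training matrix.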

Commented 2015-08-25 04:12:05

Thanks for this great article!

I am trying to detect when a cup of coffee has been made by listening to the sound of the coffee machine and using machine learning. Your article looks very close to what I want to accomplish.

After reading this article I still have a few questions I hope you can help me out with:

How did you feed the audio signal to the ML algorithm?

Quote: "So I decided to simply vectorize the MPS ...". What does this mean?

Is the source code available somewhere? (I looked on your GitHub and BitBucket.) This could answer a lot of my questions.

Your article is very interesting for my case. Unfortunately, after giving some sample code for how to generate an MPS diagram, it skips over some details I really would like to know. Hopefully you can help me out :)

Thanks in advance!

Commented 2015-05-02 08:29:09

"This is naturally a fictitious example because the Leafs are obviously not making the playoffs once again this year"

hahahhaha :)

Just before "Philips hue light show", you said you tried with a different TV station.

Can you describe a little here?

Which one(s)? Only with habs or with a different club?

Did you try TSN with Leafs for example?

Can a Calgary fan use that with CBC for example?

Really nice stuff Francois! Are you gonna do the same for the Als? :)

Commented 2015-04-21 13:04:41

Excellent implementation, congratulations

Commented 2015-05-25 12:31:25

@Kevin: The lights animation is custom Python code that uses the phue library. The blog post has a code snippet illustrating it.

Commented 2015-05-07 19:44:48

Nice project!

I've seen no mention of the Budweiser project, similar to this but probably powered by a not-so-cool technology.

CBC broadcasts over the air free signal and they provide live closed captions. TVA Sports must be doing that too (powered by speech recognition made in Montreal!). If the delay is too long with the closed captions, they could certainly be used to terminate FA sooner, not to mention they could provide description of the match that could help if you do some NLP and try to determine who has the puck!

The latency is probably better with the analysis of the audio but all of this is triggered by someone manually pushing a button. You could probably augment this system if you rely on the graphics that broadcasters like CBC show. These are very expensive to create and they follow a well-determined procedure when a goal is scored.

Go Canadiens Go!

Commented 2015-05-07 08:09:05

@Ethan: Simpler than what you mentioned because I'm streaming the game on a Mac Mini hooked up to my home theater receiver using HDMI. So playing the goal song is done by having VLC on the Mac simply play the song. I adjusted the volumes between the song and the game's feed so they were roughly well calibrated and voilà.

Commented 2016-12-29 11:28:23

Just a thought here, but could you add a variable to the machine learning for home vs away? You could get that data from a number of different sources.

At home games, the goal horn always sounds, which could help increase the positive hits drastically (home game + "goal" + goal horn = good goal). At away games, the sound of the goal horn could decrease the negative events as well (away game + "goal" + goal horn = bad goal). Also on away games, "goal" + no goal horn = good goal could help increase the positive hits.
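Spelled out as code, those three rules could look like the sketch below. The function name and labels are made up for illustration, and it assumes separate detectors already provide the "goal call" and "goal horn" signals. The one combination not covered above (home game, goal call, no horn) is returned as "unknown" rather than guessed:

```python
def classify_goal(is_home_game, goal_call_detected, horn_detected):
    """Combine home/away info with the goal call and the goal horn."""
    if not goal_call_detected:
        return "no_goal"
    if is_home_game:
        # Home game + "goal" + goal horn = good goal.
        # Home game + "goal" without a horn is not covered by the rules.
        return "good_goal" if horn_detected else "unknown"
    # Away game: the horn sounds for the other team.
    return "bad_goal" if horn_detected else "good_goal"


print(classify_goal(True, True, True))    # good_goal
print(classify_goal(False, True, True))   # bad_goal
print(classify_goal(False, True, False))  # good_goal
```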

Commented 2018-04-20 15:17:23

Hi, I have been looking for this solution for years! Can I buy a set up from you? Very anxious to do this as soon as possible please! I have a substantial amount of smart devices, over 50 hue lights, just started using openhab, but I'm not married to anything, just want this working like yours for Jets games.

Commented 2020-02-07 01:15:04

What you have done with this is awesome. I have a whole Hue setup and am jealous. Maybe someday. Let's go Flyers.

I am trying to work on a similar but far, far less complex project. Not related to hue. Full disclosure, I am ok on the computer but have zero coding experience. If you do read this, I'm wondering whether you could at least point me in the right direction of how you would attack it (software, hardware, etc.). And I'm on Windows, not Mac.

I want to run a Sega Genesis emulator on a PC or Raspberry Pi (NHL 94 specifically). When the home team scores in the game, it's essentially the exact same sound bite that plays for about 2 to 3 seconds. I would like the PC or Pi to have something running in the background listening for that sound bite at all times, and when a goal is scored, it would trigger the PC or Pi to transmit a stored IR signal to an NHL Fan Fever Goal Light that I already own (https://www.amazon.com/Goal-Light-Horn-Team-Labels/dp/B009P9Y4JA). I have isolated the short sound bite in wav and mp3 format.

Any thoughts or input would be greatly appreciated. Thx for reading

Commented 2021-10-16 07:54:49

hi,

thx for sharing! I have a question:

in your code, where does the class "RingBuffer" come from?

Commented 2022-10-17 09:40:20

I have a suggestion / comment about this part:

"Note that the trained model only works for the specific TV station and commentator I trained on. I trained on regular season games aired on TVA Sports because they are airing the playoffs. I tried testing on a few games aired on another station and basically detected no goals at all. This means performance is likely to go down if the commentator catches a cold."

Would it be possible to have the program simultaneously check an API as a backup method for detecting a true positive in case the commentator did in fact have a cold? I understand that using this backup method may cause delayed responses, but wouldn't that be better than no response at all?

Commented 2020-07-27 16:06:49

You're my hero. And I hate the Habs. Awesome work.

Go Stars.

Commented 2021-12-21 18:24:54

Thanks for this! A few minor changes are needed to the code to display the MPS of a sound file:

1. replace `import librosa` with `import librosa.display`

2. librosa.logamplitude() has been removed. Replace that line with:

`log_S = librosa.power_to_db(S, ref=np.max)`

3. (For people using PyCharm) at the bottom of the file add `plt.show()`
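For anyone wondering what the replacement call in step 2 actually computes, here is a rough numpy-only sketch. It assumes librosa's documented defaults for `power_to_db` (`amin=1e-10`, `top_db=80.0`) and hard-codes `ref=np.max`. The function name is made up, and this is an approximation for illustration, not librosa's own code:

```python
import numpy as np


def power_to_db_sketch(S, amin=1e-10, top_db=80.0):
    """Approximate librosa.power_to_db(S, ref=np.max) for a power spectrogram."""
    S = np.asarray(S, dtype=float)
    ref_value = np.max(S)  # ref=np.max: the loudest bin becomes 0 dB
    # Convert power to decibels, flooring tiny values at amin to avoid log(0).
    log_S = 10.0 * np.log10(np.maximum(amin, S))
    log_S -= 10.0 * np.log10(np.maximum(amin, ref_value))
    # Clip everything more than top_db below the peak.
    return np.maximum(log_S, log_S.max() - top_db)
```

With `ref=np.max` the loudest bin sits at 0 dB and everything else is negative, which is why the colorbar on the MPS plot tops out at 0.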